ImmerseGen

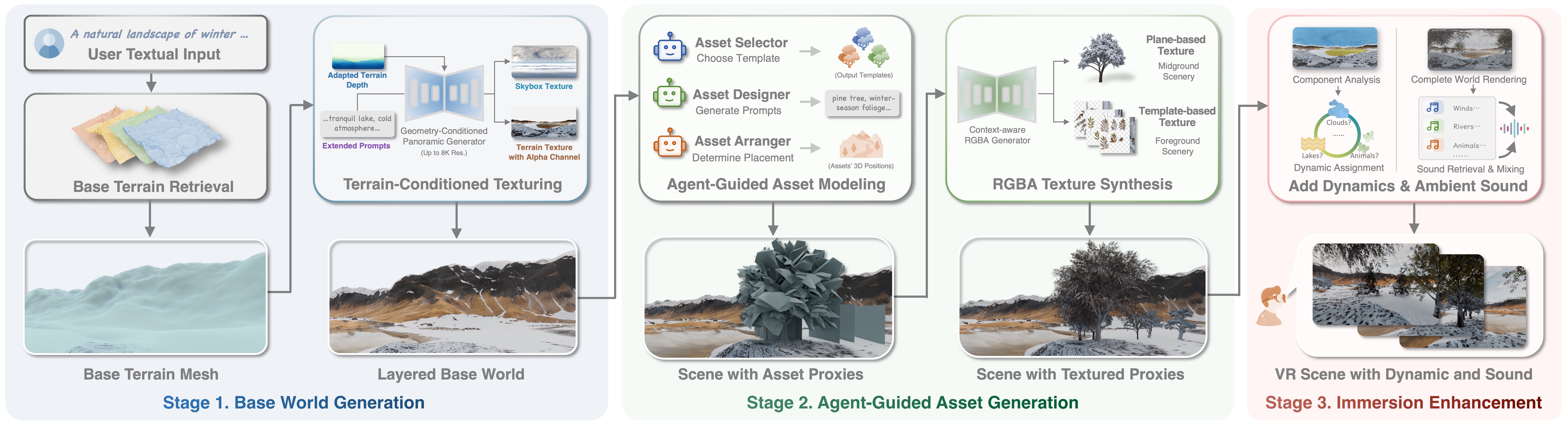

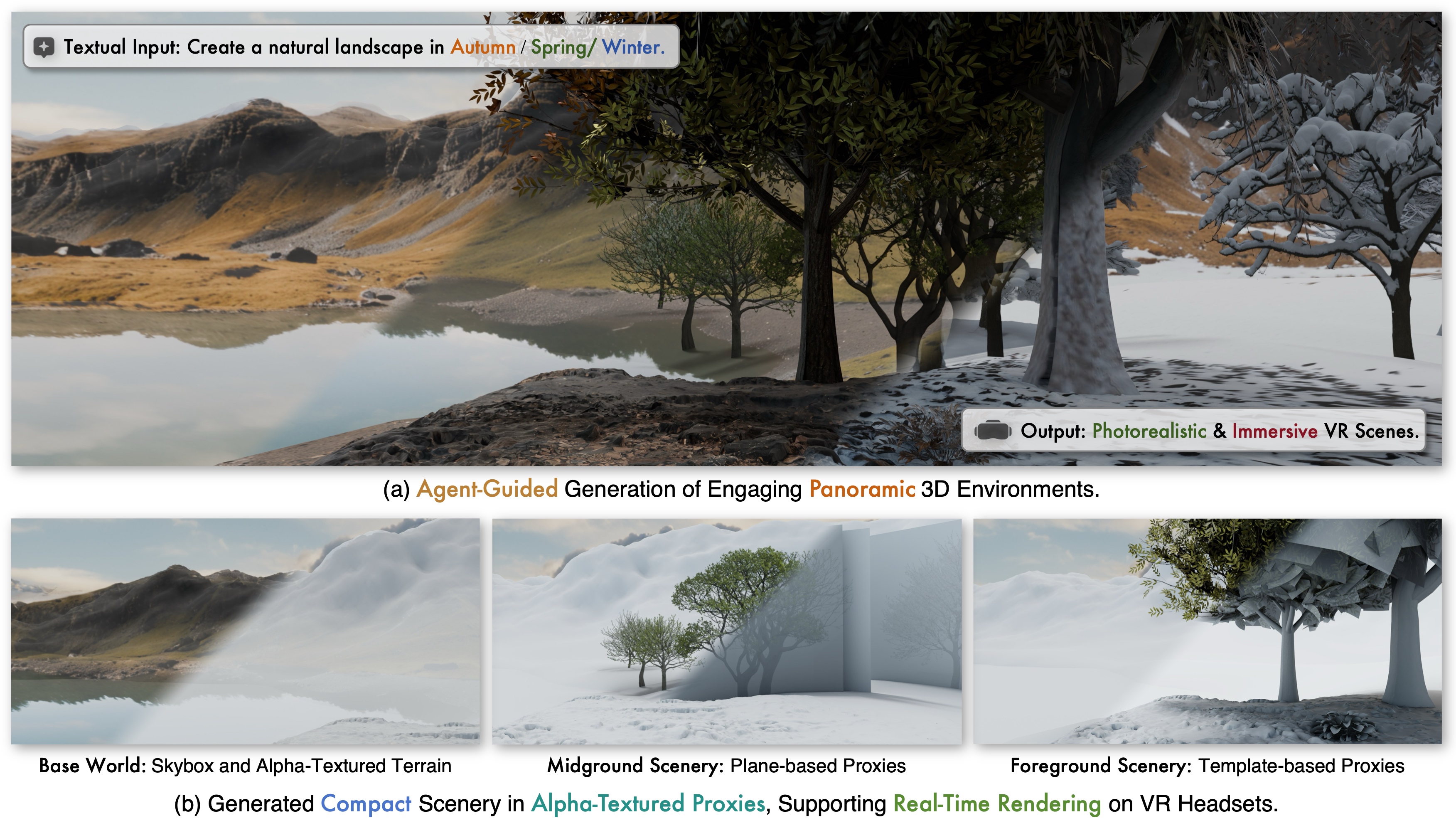

Agent-Guided Immersive World Generation with Alpha-Textured Proxies

ImmerseGen creates panoramic 3D worlds from input prompts. It uses agent-guided asset design and arrangement to generate compact alpha-textured proxies. This reduces the reliance on rich, complex assets while preserving diversity and realism, which makes the resulting worlds well suited to immersive VR experiences.
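The page does not say how the proxies are rendered. As a generic illustration only, the sketch below makes an assumption that is not taken from ImmerseGen's pipeline. It treats an alpha-textured proxy as a single low-poly quad whose RGBA texture carries the fine silhouette detail that a dense mesh would otherwise need to model. Each sampled texel is then composited onto the background with the standard Porter-Duff "over" operator using straight alpha. The names `AlphaProxy`, `over`, and `shade` are hypothetical.

```python
from dataclasses import dataclass

RGB = tuple[float, float, float]
RGBA = tuple[float, float, float, float]


def over(src: RGBA, dst: RGB) -> RGB:
    """Porter-Duff 'over' with straight (non-premultiplied) alpha onto an opaque background."""
    r, g, b, a = src
    return (
        r * a + dst[0] * (1 - a),
        g * a + dst[1] * (1 - a),
        b * a + dst[2] * (1 - a),
    )


@dataclass
class AlphaProxy:
    """Hypothetical proxy: one textured quad (4 vertices, 2 triangles).

    Shape detail lives in the texture's alpha channel rather than in geometry.
    """

    texture: list[list[RGBA]]  # rows of RGBA texels, components in [0, 1]

    def sample(self, u: float, v: float) -> RGBA:
        # Nearest-neighbour lookup with u, v in [0, 1], clamped to the last texel.
        h = len(self.texture)
        w = len(self.texture[0])
        x = min(int(u * w), w - 1)
        y = min(int(v * h), h - 1)
        return self.texture[y][x]


def shade(proxy: AlphaProxy, u: float, v: float, background: RGB) -> RGB:
    # Fully transparent texels leave the background untouched,
    # opaque texels replace it, and partial alpha blends the two.
    return over(proxy.sample(u, v), background)


if __name__ == "__main__":
    leaf = AlphaProxy(texture=[
        [(1.0, 0.0, 0.0, 1.0), (0.0, 0.0, 0.0, 0.0)],
        [(0.0, 1.0, 0.0, 0.5), (0.0, 0.0, 1.0, 1.0)],
    ])
    sky = (0.0, 0.0, 1.0)
    print(shade(leaf, 0.1, 0.1, sky))  # opaque texel
    print(shade(leaf, 0.9, 0.1, sky))  # transparent texel shows the sky
    print(shade(leaf, 0.1, 0.9, sky))  # half-transparent blend
```

The design trade-off this illustrates is that geometry stays at a fixed, tiny vertex count no matter how intricate the silhouette is. Visual complexity is shifted into texture memory instead.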

Island

Desert

Lake

Anime Room

Forest

Futuristic City